5 Machine Learning and Other Scientific Goals: A Clash

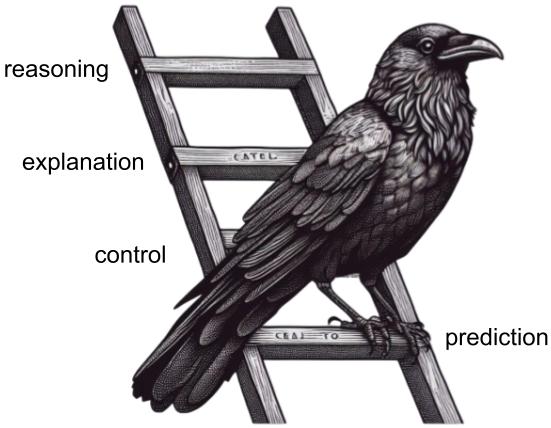

Okay, so the use of machine learning in science can be justified by its predictive power, and prediction is an important scientific goal. All scientists aboard the machine learning ship! Right? Machine learning has been around for years now, so why do scientists still have a bad gut feeling about it? In its raw form, supervised machine learning clashes with three other essential goals of science that go beyond prediction: controlling, explaining, and reasoning about phenomena [1], [2].

The great success of tornado prediction increased the number of machine learning supporters. But many voices remained critical. The old veterans, in particular, argued that science is about explaining why things happen, not just making predictions. Once again, the Elder Council of Raven Science ended in a heated philosophical debate: Does supervised learning lead to a science we don’t understand and can’t control?

5.1 Prioritizing prediction over other scientific goals

We believe that the main reason why some scientists are suspicious of machine learning is its exclusive focus on prediction. Bare-bones machine learning readily sacrifices any other goal scientists have, such as controlling, explaining, or reasoning about phenomena, for a tiny gain in predictive performance. A supervised machine learning model will take any shortcut to improve predictive performance:

- If given non-causal features that are associated with the outcome, the machine learning algorithm will likely learn a model that relies on them. For example, a machine learning model may rely on the number of sold winter jackets to predict flu cases.

- If the training data are biased, the model will reflect these biases because doing so helps it predict the biased data.

- If a complex model has a better predictive performance than an interpretable model, even marginally, the model selection step will spit out the complex model as the winner.

An absolute framing of a question in terms of prediction drowns out other goals. Depending on the context, these goals may have higher importance than prediction.
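The first shortcut can be made concrete with a small simulation. The sketch below is a toy illustration, not real epidemiological data: we assume that cold weather drives both winter-jacket sales and flu cases, and that jackets have no effect on flu at all. All variable names and coefficients are hypothetical choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5_000

# Hypothetical data-generating process: temperature is the common cause.
temperature = rng.normal(10.0, 8.0, size=n)  # degrees Celsius
jackets = 500.0 - 20.0 * temperature + rng.normal(0.0, 40.0, size=n)
flu = 300.0 - 10.0 * temperature + rng.normal(0.0, 30.0, size=n)  # no jacket term


def fit_linear(X, y):
    """Ordinary least squares with an intercept; returns (coefficients, R^2)."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    residual = y - A @ coef
    r2 = 1.0 - residual.var() / y.var()
    return coef, r2


# Model 1: only the non-causal feature is available.
coef_jackets, r2_jackets = fit_linear(jackets, flu)

# Model 2: the common cause is available as well.
coef_both, r2_both = fit_linear(np.column_stack([jackets, temperature]), flu)

print(f"jackets only:          jacket slope={coef_jackets[1]:.3f}, R^2={r2_jackets:.3f}")
print(f"jackets + temperature: jacket slope={coef_both[1]:.3f}, R^2={r2_both:.3f}")
```

Under these assumptions, the jackets-only model should predict flu cases well and assign jackets a clearly positive weight, even though jackets play no causal role in the simulation. Once temperature is included, the jacket weight should shrink towards zero. The predictive objective alone gives the model no reason to prefer one reading over the other.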

5.2 Understand action effects to control phenomena

Imagine you study education science. Your data analysis shows a strong association between the academic success of kids and the education level of their parents. In Germany, for example, only 8 of 100 students coming from a working-class family receive a Master’s degree, whereas 45 of 100 students with academic parents do so [3]. Indeed, this association allows you to make predictions. Tell me where you come from and I’ll tell you where you’ll end up. Do you come from a working-class family? The prediction model says: “No Master’s degree for you!”

Focusing on prediction is deeply unsatisfying in this context. Ethically, we as a society do not want this disparity between kids from the working class and kids from academic families; we believe in the equality of opportunity. Instead of predicting academic success, we want to find policy interventions to close the gap. How can we encourage more educational climbers? What are the factors that hinder working-class children from pursuing a Master’s degree, and how can we change them? Would an increase in student stipends lead to more students from working-class families?

All these questions are concerned with control. Control is the ability to perform informed actions on a system and estimate the consequential dynamics of the system. For control, we must:

- know the causal factors, know how to act upon them, and also be able to do so

- evaluate the uncertainties in the consequences of actions

- analyze time dynamics and potential long-term equilibria

Let’s say the German government decides to increase the financial support for students, known under the beautiful German term Bundesausbildungsförderungsgesetz, or BAföG for short. The government must be sure that a lack of money is one causal factor that prevents students from studying (this is indeed true, see [3]), and it must have the money to increase financial support. The government should also know what effect the increase will have: Is it eaten up by inflation? Can it get more working-class kids to academic degrees? How long will it take for the increase in financial support to be enacted, and when and how will it impact students’ choices?

Scientific models have always been a way to enable control over a system. They represent the system, allow you to run simulations, and thereby let you think through the outcomes of actions. However, standard machine learning models are not suitable here. Machine learning models are unable to distinguish causes from effects. Machine learning models represent neither potential actions nor their consequences. Machine learning models only give point estimates rather than uncertainties. Machine learning models often ignore the role of time.
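To see why a purely predictive model is a poor guide for action, consider the following sketch. It simulates a toy world in which family background influences both the financial support a student has and the student's academic outcome, while support itself has only a modest causal effect. The structure and all numbers (including `TRUE_EFFECT`) are assumptions made for illustration and are not estimates from [3].

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000
TRUE_EFFECT = 0.5  # assumed causal effect of support on the outcome

# Hypothetical structural model: background influences support AND outcome.
background = rng.normal(size=n)
noise_support = rng.normal(size=n)
noise_outcome = rng.normal(size=n)


def outcome(support):
    """Outcome produced by the toy world for a given level of support."""
    return TRUE_EFFECT * support + 2.0 * background + noise_outcome


support = background + noise_support
y = outcome(support)

# A predictive model fitted to observational data (simple linear regression).
slope, intercept = np.polyfit(support, y, deg=1)

# Intervention: raise everyone's support by one unit, leaving all else fixed.
support_do = support + 1.0
predicted_gain = np.mean(slope * support_do + intercept) - np.mean(slope * support + intercept)
actual_gain = np.mean(outcome(support_do) - y)

print(f"gain predicted by the regression: {predicted_gain:.2f}")
print(f"gain realized in the simulation:  {actual_gain:.2f}")
```

In this setup the regression is a perfectly reasonable predictor of outcomes, yet it should overstate what the intervention achieves, because its slope also absorbs the influence of family background. The simulated world knows the effect of the action only because we wrote the causal structure into `outcome`; the regression has no access to it.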

So no control with machine learning? We discuss how at least some of these problems can be alleviated in Chapter 10 and Chapter 12.

5.3 Explain phenomena through counterfactuals

Imagine you study economics. You want to understand how financial crises arise. Your approach is to study historical crises to gain insights into potential future crises. The first thing that comes to your mind is to check the indicators of past crises. Clear signs were plunges in the stock market, increased unemployment rates, or lower gross domestic product (GDP) across countries. All these signs are indicators associated with the crisis. You may even detect them by simply fitting a machine learning model to a dataset containing a broad range of general economic indicators. But is this really what you are after?

Scientists are ultimately interested in why financial crises arose rather than merely that they did. Would there have been the Great Depression in the 1930s without the Wall Street Crash of 1929? How did the Yom Kippur War impact the 1973 oil crisis? What was the role of mortgage-backed securities in the financial crisis of 2007-2008?

In all these cases, we are interested in the causes that led to certain outcomes. We want to explain rather than just predict. Explanations point to factors that, if they had been different, would have changed the explained phenomenon. Think of the oil crisis in 1973. To establish that the Yom Kippur War was a cause, we must establish two conditions: 1) factual condition: there was the Yom Kippur War and the oil crisis in 1973; 2) counterfactual condition: if there had not been the Yom Kippur War, there would have been no oil crisis. While the first condition can be easily checked empirically, the second condition is tough.1 No one knows for sure how history would have turned out without the Yom Kippur War. The Organization of Arab Petroleum Exporting Countries (OAPEC) might not have set an embargo, or it might have set the embargo later to influence another US policy. Still, scientists can often provide arguments to establish counterfactuals, for example, by pointing to other actions of the OAPEC, game-theoretic reasoning, or interviews with OAPEC leaders.
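The two conditions can be written down as a check on a deliberately simplistic causal model. The sketch below is a toy, not a historical claim: the functions `embargo` and `oil_crisis` and the single background variable `other_embargo_motive` are hypothetical stand-ins for whatever a real explanatory model would contain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Background:
    """Unobserved circumstances held fixed when comparing worlds (hypothetical)."""
    other_embargo_motive: bool  # would an embargo have been set anyway?


def embargo(war: bool, u: Background) -> bool:
    # Toy mechanism: an embargo follows from the war or from another motive.
    return war or u.other_embargo_motive


def oil_crisis(embargo_imposed: bool) -> bool:
    # Toy mechanism: the crisis occurs exactly when there is an embargo.
    return embargo_imposed


def world(war: bool, u: Background) -> bool:
    """Run the toy model and report whether an oil crisis occurs."""
    return oil_crisis(embargo(war, u))


def war_explains_crisis(u: Background) -> bool:
    factual = world(True, u)          # 1) war happened and the crisis occurred
    counterfactual = world(False, u)  # 2) same background, but no war
    return factual and not counterfactual


print(war_explains_crisis(Background(other_embargo_motive=False)))
print(war_explains_crisis(Background(other_embargo_motive=True)))
```

The verdict flips with the background assumption, which is exactly the part that cannot be read off from observed data. This is why the counterfactual condition has to be argued for with additional evidence rather than simply measured.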

Philosophers of science agree on these key ingredients for explanations:

- Explanandum (pl. explananda): a statement about the phenomenon to be explained. In the oil-crisis example, the explanandum could be the statement ‘There was an oil crisis in 1973.’

- Explanans (pl. explanantia): a statement that if true would explain the explanandum. In the oil-crisis example, the explanans could be the statement ‘The US supported Israel in the Yom Kippur War.’

- Explanatory link: rules or laws describing the mechanism underlying the phenomenon by which the explanans is connected to the explanandum. In the oil-crisis example, the explanatory link could be ‘The OAPEC, which sided with the Arab states in the Yom Kippur War, wanted to change the US policy concerning Israel. The OAPEC leaders, particularly King Faisal, believed that oil could be used as a weapon to force the US to change its policy.’

Scientific models are one approach to obtaining scientific explanations. Scientific models represent the explanandum, the factors referenced in the explanans, and, most importantly, the explanatory link (e.g. the causal relationships) to establish a scientific explanation. Unfortunately, standard machine learning models do not serve this function of scientific models. Machine learning models do not distinguish between causal factors and mere associations. Machine learning models do not describe the real-world mechanism that led to the outcome but only give predictions. Machine learning models work well in static environments but are unreliable if the environmental conditions are altered.

So no explanation with machine learning? We discuss how at least some of these problems can be alleviated in Chapter 8, Chapter 10, and Chapter 11.

5.4 Reason about phenomena via representation

Imagine you are a biologist and want to study how the amino-acid sequence influences the protein structure. You know for a fact that the protein structure is largely determined by its amino-acid sequence. Some researchers developed this amazing deep neural network that is very good at predicting the protein structure. Great, problem solved?!

Well, yes and no. You solved one critical aspect of the problem: you can describe the protein structure, and this is what ultimately defines the function of the protein. But it seems your solution is not intelligible to us humans, and ultimately, humans are the entities who are conducting science. Why do certain amino-acid sequences lead to specific predicted protein structures? What are the most important subsequences when it comes to the prediction? How does the prediction model align with theoretical biological knowledge about amino-acid sequences and proteins?

Being able to reason about phenomena is important for two reasons:

- Communication: Humans must be able to teach and share scientific knowledge in schools, universities, and research papers. This allows us as a species to jointly reason about phenomena and collaborate. Moreover, if scientific knowledge informs public decisions, it must be cognitively accessible to a broad public.

- Intuition pump: Intuition drives the questions scientists ask and the experiments they conduct. It allows us to draw links to other phenomena or fields, and to traditional scientific models or knowledge. You cannot intuit about phenomena to which you have no cognitive access.

One approach to reasoning about phenomena is via scientific models. Scientific models provide a language for phenomena. Through variables, functions, and parameters, models deliver a vocabulary (tokens) to talk about phenomenon components, dependencies, and properties, respectively [5]. Simplicity and beauty are important in that respect. Simplicity allows humans to communicate effectively and efficiently, and it lets us emulate the behavior of a phenomenon without running exact simulations. Beauty sparks our curiosity and intuitions; it allows us to learn the scientific language quickly and draw new links.2

Standard machine learning models, like the protein structure prediction model from the example above, are unfortunately terrible reasoning tools. Only very few model elements can be interpreted, such as the input (amino-acid sequence) and the output variables (protein structure). Most other model elements do not allow a simple interpretation; think, for example, of the weights or activation functions in the neural net. Similarly, it is hard to assess how well machine learning models align with our background knowledge, and also hard to infuse such knowledge into them. Instead of simplicity and beauty, machine learning models are designed for high complexity and trained solely under the objective of predictive performance.

So no reasoning with machine learning models? We discuss how at least some of these problems can be alleviated in Chapter 8, Chapter 9, and Chapter 14.

1. The fact that it is impossible to get data to establish the counterfactual condition is called the fundamental problem of causal inference [4].

2. Interestingly, simplicity and beauty seem to be favored not only by humans but also, at least partially, by nature, as evidenced by the overwhelming success of Ockham’s razor in many disciplines when it comes to extrapolation-type predictions.